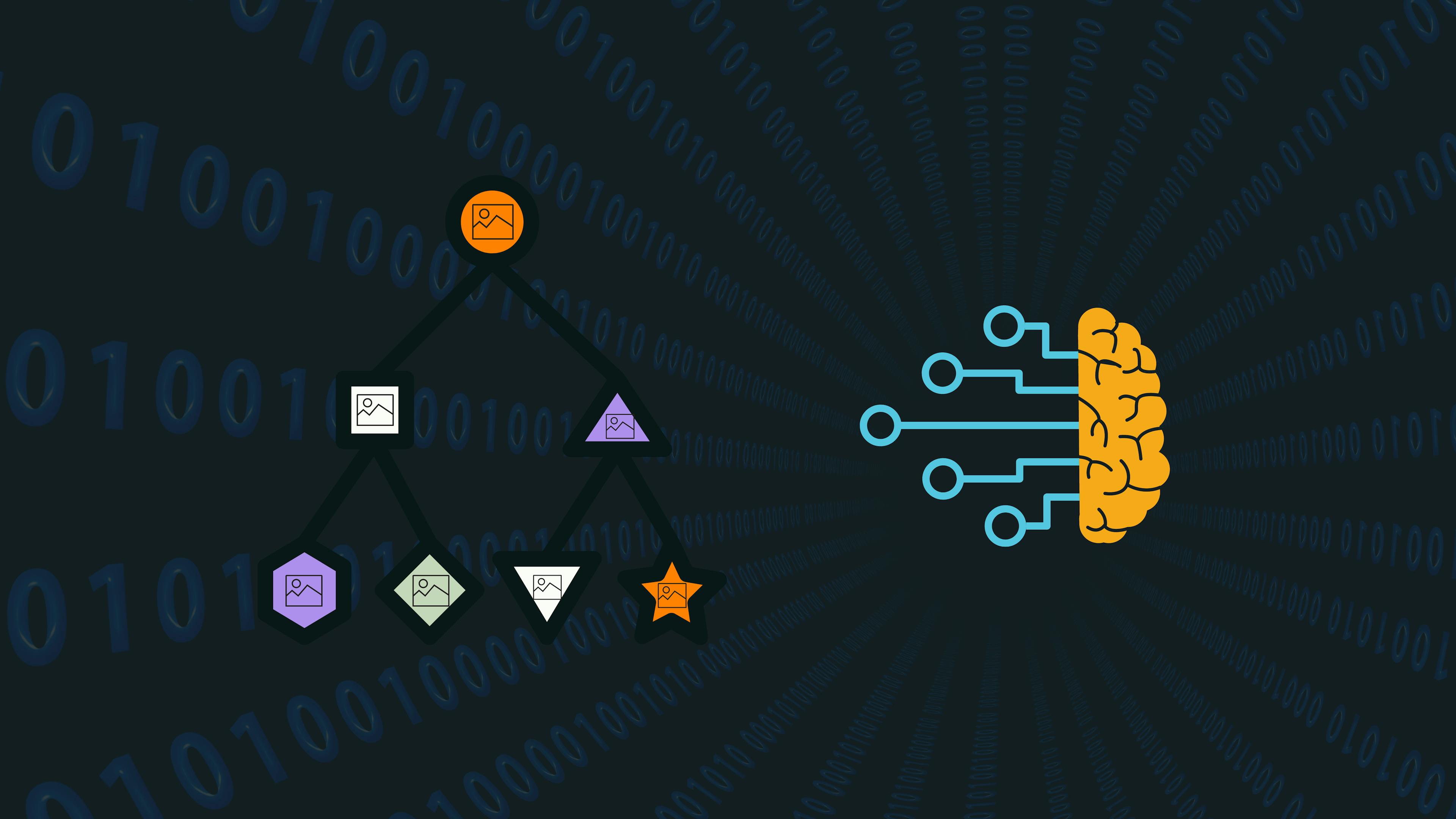

Understanding Classification in Machine Learning

by

September 17th, 2025

Survived regression to mean, lives by the motto 'novels are coffee, poems are latte'
